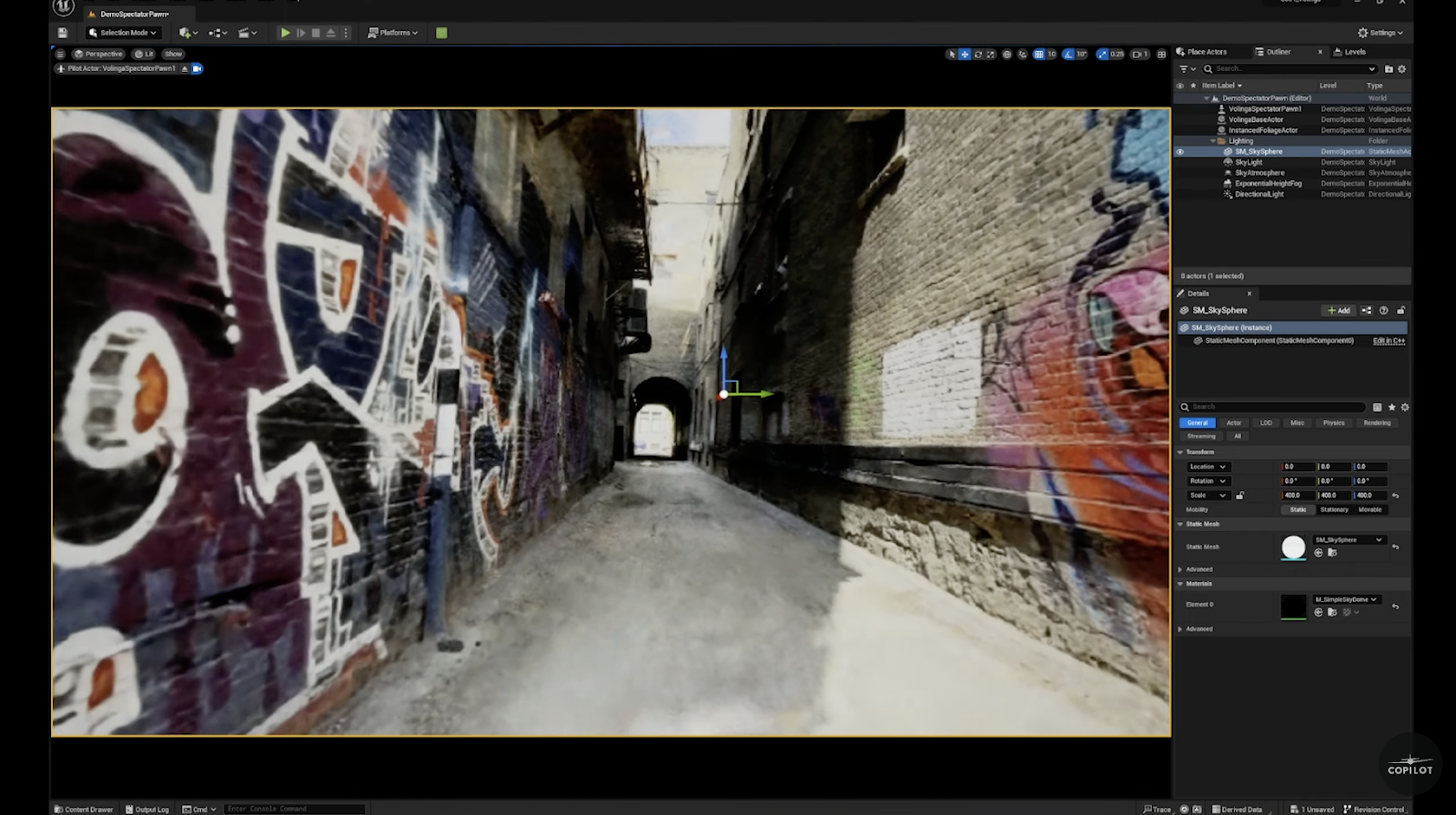

We've got something pretty exciting to share with you. We are about to turn an ordinary alley into a jaw-dropping 3D digital asset using nothing but a trusty iPhone and the power of AI. Can you believe it? All you have to do is take a simple video, and magic happens!

Now, hold on a second. Before you start picturing a bunch of photos stitched together with photogrammetry, let us introduce you to Volinga Studio, a cutting-edge AI tool that creates mind-blowing 3D environments for render engines like Disguise and Unreal Engine. And here's the best part: you don't need to fuss with the usual photogrammetry methods. Volinga.ai uses something called Neural Radiance Fields, or NeRF for short.

So, how does NeRF work its wonders? Instead of matching features between individual photos to build a mesh, NeRF trains a neural network on the frames of your footage to learn the radiance of the scene, meaning the colour and brightness of the light leaving each point in each viewing direction, along with how dense the scene is at that point. Using this information, the model can render the space from viewpoints the camera never occupied, and it can fill in plausible detail in areas that weren't fully captured by the input images. Isn't that spectacular?
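To make "radiance" a little more concrete, here is a minimal sketch of the volume-rendering step described in the original NeRF paper: the colours sampled along one camera ray are blended into a single pixel, weighted by how dense the scene is at each sample. This is only an illustration, not Volinga's implementation. The function name `composite_ray` and the sample values are our own assumptions, and in a real NeRF the densities and colours come from the trained network rather than from hand-written lists.

```python
import math


def composite_ray(densities, colors, deltas):
    """Blend samples along one camera ray into a single RGB pixel.

    densities: opacity of the scene at each sample (sigma >= 0)
    colors:    (r, g, b) radiance at each sample, channels in [0, 1]
    deltas:    distance covered by each sample along the ray
    """
    pixel = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light still reaching the camera
    for sigma, rgb, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha          # contribution to the pixel
        for k in range(3):
            pixel[k] += weight * rgb[k]
        transmittance *= 1.0 - alpha            # light left for later samples
    return tuple(pixel)


# Hypothetical ray: two samples of empty air, then a dense red wall.
densities = [0.0, 0.0, 50.0, 50.0]
colors = [(0.0, 0.0, 1.0), (0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
deltas = [0.5, 0.5, 0.5, 0.5]

print(composite_ray(densities, colors, deltas))  # very close to pure red
```

The empty samples contribute nothing because their density is zero, so the pixel takes its colour from the first dense surface the ray hits. Training a NeRF amounts to adjusting the network until pixels rendered this way match the frames of the input video.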

That's why we chose this cool tunnel as our environment. It's filled with various materials that have different highlights, allowing us to showcase the true capabilities of Volinga.ai. Now, let's dive into the action and start capturing this incredible transformation.

Our next step was to film! Volinga's documentation has instructions on which camera moves to use and how to get the best result out of your input video.

The number one tip? Never stand still, unless you're doing a vertical jib shot.

This is what the video looked like from our iPhone. And in just a few short minutes, this part of the process is done!

Once we're done capturing, the next step is to upload our footage into Volinga.

Let's address the burning question though: NeRF versus Photogrammetry—what makes them different?

At a basic level, photogrammetry involves matching individual key points across images taken from different angles and triangulating them into a 3D object. As long as you have a sufficient number of well-taken photos covering every part of the object, your scan can turn out great!
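For the curious, here is a rough sketch of the geometric idea behind that key-point step: if two cameras see the same point, each one defines a ray toward it, and the 3D position sits where those rays (nearly) meet. This is a simplified midpoint triangulation with made-up camera positions, and the helper name `triangulate_midpoint` is our own. Real photogrammetry software also has to detect and match the key points and estimate the camera poses, which we skip here.

```python
def dot(u, v):
    """Dot product of two 3D vectors."""
    return sum(a * b for a, b in zip(u, v))


def triangulate_midpoint(p1, d1, p2, d2):
    """Estimate a 3D point from two camera rays.

    Each ray is an origin p (camera position) plus a direction d
    pointing at the same key point. Returns the midpoint of the
    shortest segment connecting the two rays.
    """
    w = tuple(a - b for a, b in zip(p1, p2))
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; the point cannot be triangulated")
    t = (b * e - c * d) / denom  # distance parameter along ray 1
    s = (a * e - b * d) / denom  # distance parameter along ray 2
    q1 = tuple(p + t * v for p, v in zip(p1, d1))
    q2 = tuple(p + s * v for p, v in zip(p2, d2))
    return tuple((x + y) / 2.0 for x, y in zip(q1, q2))


# Hypothetical setup: two cameras 2 m apart, both looking at the same key point.
camera_a = (0.0, 0.0, 0.0)
camera_b = (2.0, 0.0, 0.0)
ray_a = (1.0, 0.0, 5.0)
ray_b = (-1.0, 0.0, 5.0)

print(triangulate_midpoint(camera_a, ray_a, camera_b, ray_b))  # (1.0, 0.0, 5.0)
```

The parallel-ray check hints at why photogrammetry wants photos from clearly different angles: when two views are almost identical, the rays barely converge and the depth estimate becomes unreliable.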

On the other hand, NeRF takes a different approach. It trains a neural network to model how light leaves the objects and materials in the scene. By analyzing the input video frames, the model learns what the environment looks like from every captured angle and creates a stunning 3D representation. A standard NeRF doesn't need to have seen other environments beforehand: the network is trained on the frames of your own capture, which is why good coverage matters so much. And here's the craziest part: NeRF can even render views that were never captured by the camera and fill in small gaps between them. However, the better the angles and camera positions in the input footage, the more impressive the output will be.

To see what we created in just a few minutes using Volinga Studio, check out the short video below.

Volinga Studio just came out of beta and is now available to the public, so don't just take our word for it. If you want to see what it's capable of, give it a try yourself and let us know what you create!

The future of UE5 and AI sure is looking exciting.